As AI grows beyond cloud data centers, the real intelligence revolution is happening on the devices closest to us.

1. The Era of On-Device Intelligence

The AI industry is witnessing a tectonic shift — from centralized cloud inference to distributed, on-device intelligence.

Meta Platforms, the company behind Facebook, Instagram, and WhatsApp, has partnered with Arm Holdings to power this transition. Their multi-year strategic partnership aims to build a unified, power-efficient compute foundation that runs AI models from the data center to the device.

This collaboration comes as part of Meta’s broader AI investment plan, with planned capital expenditure of up to $65 billion for 2025, an effort to reshape everything from generative AI assistants to augmented-reality systems. By combining Meta’s AI software stack (like PyTorch and Llama) with Arm’s silicon architecture, the partnership promises one thing: scalable, efficient, and accessible AI everywhere.

At NiDA AI, we see this as a defining moment — the beginning of a true edge-intelligence era.

2. Why Meta Chose Arm — The Power Efficiency Race

For years, cloud GPUs have powered most AI workloads. But at scale, the energy cost and round-trip latency of this architecture become hard to sustain: every inference in a distant data center costs both energy and time.

Enter Arm.

Arm-based designs power an estimated 99% of smartphones and a large share of IoT devices worldwide, and are known for low power consumption and scalable compute efficiency. Meta’s decision to partner with Arm signals a clear intent: unify AI compute from data center cores (Neoverse) to edge devices (Cortex, Ethos-U, and Mali).

With this unified design, a large language model trained in Meta’s data center can later run in compressed form directly on your smartphone or headset, drawing a few watts or less instead of the kilowatts a server rack consumes.
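
One common way to produce such a compressed model is weight quantization: storing each weight as an 8-bit integer instead of a 32-bit float, which cuts weight storage roughly fourfold. The sketch below is a minimal, pure-Python illustration of symmetric int8 quantization. The function names and sample weights are made up for this example, and it is not a description of how Meta or Arm actually compress Llama models.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values plus a single scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid division by zero for an all-zero (or empty) weight list.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale factor, which is the trade-off quantization makes: a small loss of precision in exchange for a smaller, cheaper model.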

3. The On-Device AI Paradigm

“On-device AI” means models that live where the data is generated — not in the cloud.

It’s an evolution toward autonomy and privacy.

Why it matters

- Low Latency → Real-time response (critical for autonomous systems, safety devices, or AR).

- Privacy First → Data stays local, reducing exposure risks.

- Offline Intelligence → Works without network connectivity.

- Power Efficiency → Reduces bandwidth and cloud compute cost.
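
To make the latency point concrete, here is a small worked sketch of a per-frame time budget. At 30 frames per second, a system has about 33 ms to handle each frame. The inference and network timings below are illustrative placeholders, not measurements of any real device or service.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def meets_deadline(inference_ms: float, network_rtt_ms: float, fps: float) -> bool:
    """True if inference plus any network round trip fits within one frame."""
    return inference_ms + network_rtt_ms <= frame_budget_ms(fps)


# Illustrative placeholder numbers, not measurements.
LOCAL = {"inference_ms": 20.0, "network_rtt_ms": 0.0}
CLOUD = {"inference_ms": 5.0, "network_rtt_ms": 80.0}

local_ok = meets_deadline(fps=30, **LOCAL)
cloud_ok = meets_deadline(fps=30, **CLOUD)
print(local_ok, cloud_ok)
```

Under these assumed numbers, the cloud path misses the deadline even though its raw inference is faster, because the network round trip alone exceeds the frame budget.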

Real-World Examples

- Smartphones running compact Llama variants for personal assistants.

- Cameras that perform local object recognition before sending metadata to the cloud.

- Industrial IoT sensors predicting anomalies on-site.

- NiDA AI’s own prototypes like the Display Inspection System and Sujud Counter Device, both relying on local AI inference for instant decisions.
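
The camera example above can be sketched as a small pipeline: run detection on the device, then upload only a compact description of what was found. In the sketch below, `detect_objects` is a hypothetical stand-in that returns a fixed result in place of a real on-device model, and the `Detection` fields are assumptions chosen for illustration.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x, y, width, height) in pixels


def detect_objects(frame: bytes) -> List[Detection]:
    """Hypothetical stand-in for an on-device model; returns a fixed result."""
    return [Detection("person", 0.91, (120, 40, 64, 128))]


def build_upload(frame: bytes, min_confidence: float = 0.5) -> bytes:
    """Run detection locally and serialize only the metadata for upload."""
    detections = [d for d in detect_objects(frame) if d.confidence >= min_confidence]
    payload = {"detections": [asdict(d) for d in detections]}
    return json.dumps(payload).encode("utf-8")


frame = bytes(640 * 480 * 3)  # a blank 640x480 RGB frame (921,600 bytes)
upload = build_upload(frame)
print(len(frame), len(upload))
```

The raw frame never leaves the device. Only a payload of a few dozen bytes is sent, which is where both the privacy and the bandwidth savings come from.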

Meta’s partnership with Arm validates this entire movement — proving that edge intelligence isn’t a niche anymore; it’s the inevitable next phase of AI deployment.

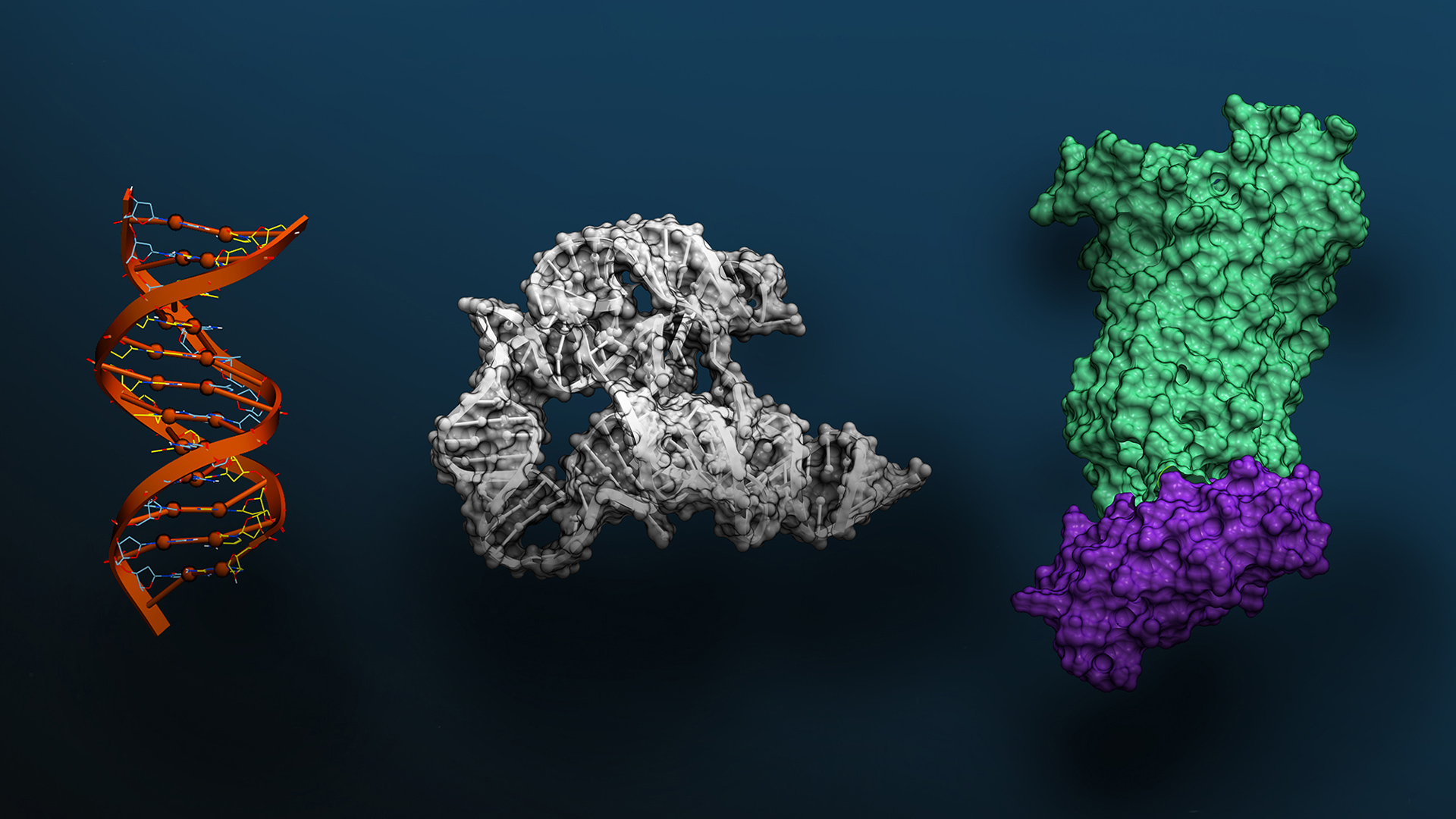

4. Arm’s Architecture — The Engine Behind Edge Intelligence

Arm’s technology portfolio forms the backbone of on-device AI acceleration.

Here’s how each component contributes:

| Compute Layer | Typical Deployment | Role in Edge AI | Typical Power Draw |
|---|---|---|---|
| CPU (Neoverse V3) | Data center servers | Task scheduling, model orchestration | 25–50 W |
| GPU (Mali-G720) | Mid-tier edge gateways | Visual inference (vision models) | 10–15 W |
| NPU (Ethos-U) | Embedded devices, IoT nodes | AI acceleration for CNN/RNN tasks | < 5 W |

Together, they form a heterogeneous compute fabric — an ecosystem where each core specializes in part of the AI pipeline.
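
As a rough illustration of what "each core specializes" can mean in practice, the sketch below assigns each step of a pipeline to a preferred compute unit and falls back to the CPU when that unit is not present. The operator names and the `PREFERRED_UNIT` mapping are hypothetical. Real runtimes make this decision through their own backend or delegate mechanisms.

```python
from typing import Dict, List

# Hypothetical mapping from operator type to the unit best suited to it.
PREFERRED_UNIT: Dict[str, str] = {
    "conv2d": "npu",
    "matmul": "npu",
    "resize": "gpu",
    "color_convert": "gpu",
}


def assign_units(ops: List[str], available: List[str]) -> Dict[str, str]:
    """Assign each op to its preferred unit, falling back to the CPU."""
    plan = {}
    for op in ops:
        unit = PREFERRED_UNIT.get(op, "cpu")
        plan[op] = unit if unit in available else "cpu"
    return plan


pipeline = ["resize", "conv2d", "matmul", "postprocess"]
full_plan = assign_units(pipeline, available=["cpu", "gpu", "npu"])
cpu_only_plan = assign_units(pipeline, available=["cpu"])
print(full_plan)
print(cpu_only_plan)
```

The same pipeline definition runs on both a fully equipped device and a CPU-only one. Only the placement of each step changes.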

Meta’s AI software, such as ExecuTorch (the on-device runtime in the PyTorch Edge stack) and compact Llama models, is being optimized for these processors, enabling developers to run inference natively on Arm-powered hardware.

This democratizes AI development — engineers can now design once, deploy everywhere.

5. The Broader Impact — A New AI Ecosystem

The Meta × Arm collaboration has ripple effects far beyond these two companies.

- For Developers: unified SDKs and better model portability between server and device.

- For Startups: lower barrier to entry, with no need for expensive GPU clusters.

- For Enterprises: scalable deployments that respect privacy regulations (GDPR, HIPAA).

- For the Planet: reduced carbon footprint via energy-efficient compute.

Mark Zuckerberg described this shift as “AI from chip to cloud — an ecosystem that learns globally but acts locally.”

That’s not just a tagline — it’s a roadmap for every AI engineer designing real-world systems.

6. What’s Next — Towards Unified AI Compute

By 2026, analysts predict that 70% of AI interactions will happen on devices rather than at cloud endpoints.

Meta’s integration of Llama models into its standalone Meta AI app (launched April 2025) already hints at this shift: multimodal agents that handle text, image, and voice on smartphones.

Meanwhile, Arm is expanding its AI PC and IoT roadmap, embedding dedicated NPUs into every compute tier.

This means that the same architecture powering your AR glasses could also drive intelligent cameras, industrial dashboards, or biomedical sensors.

At NiDA AI, we already see this convergence in our Edge-AI Surveillance and Vital-Monitoring products — where every frame and every heartbeat is processed locally, not in the cloud.

The line between “device” and “server” is officially blurring.

7. Conclusion — The Blueprint for Scalable Intelligence

The Meta × Arm alliance represents more than a business deal; it’s a blueprint for how intelligence will scale sustainably across our digital ecosystem.

Meta contributes the AI software DNA — open-source frameworks, massive datasets, and global user reach.

Arm contributes the hardware nervous system — efficient processors, accelerators, and a mature device ecosystem.

Together, they form the missing bridge between training and deployment, between cloud scale and edge presence.

And that bridge is exactly where the future of AI will thrive.

At NiDA AI, we’re building along the same philosophy — Empowering Intelligence at the edge.

Whether it’s a camera predicting anomalies, a device reading vital signs, or a sensor making split-second safety decisions, the goal is the same: make AI think locally, act instantly, and scale globally.

Call to Action

If your enterprise is exploring Edge AI, Industrial Intelligence, or Real-Time Analytics, connect with NiDA AI to co-architect your next intelligent edge.

📩 www.nidaai.com | ✉️ contact@nidaai.com